Data-driven Graph Filter based Graph Convolutional Neural Network Approach for Network-Level Multi-Step Traffic Prediction

Transportation Research Board 101st Annual Meeting (TRB), 2022

Lei Lin¹, Weizi Li², and Lei Zhu³

¹University of Rochester

²University of Memphis

³University of North Carolina at Charlotte

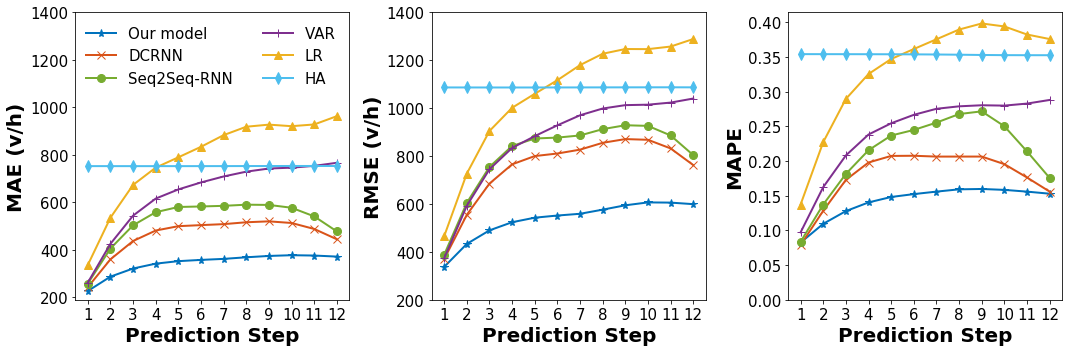

Temporal prediction performance (errors) of 6 models (1 step = 1 hour)

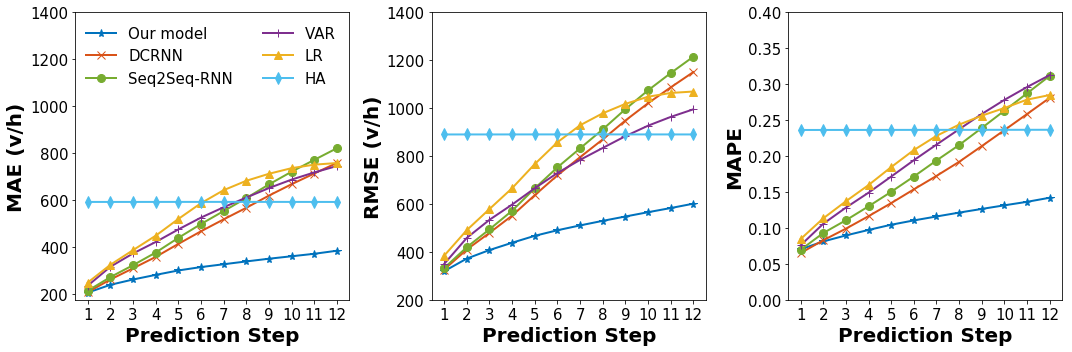

Temporal prediction performance (errors) of 6 models (1 step = 15 minutes)

Abstract

Accurate prediction of network-wide traffic conditions is essential for intelligent transportation systems. In the last decade, machine learning techniques have been widely used for this task and have achieved state-of-the-art performance. We propose a novel deep learning model, the Graph Convolutional Gated Recurrent Neural Network (GCGRNN), to predict network-wide, multi-step traffic volume. GCGRNN automatically captures spatial correlations between traffic sensors and temporal dependencies in historical traffic data. We evaluated our model on two traffic datasets extracted from 150 sensors in Los Angeles, California, at time resolutions of one hour and 15 minutes, respectively. The results show that our model outperforms five benchmark models in prediction accuracy. For instance, on the hourly dataset, our model reduces MAE by 25.3%, RMSE by 29.2%, and MAPE by 20.2% compared to the state-of-the-art Diffusion Convolutional Recurrent Neural Network (DCRNN). Our model also trains faster than DCRNN, by up to 52%.
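The abstract reports accuracy as MAE, RMSE, and MAPE, plus percentage reductions relative to DCRNN, and the figures plot error per prediction step. The page does not spell out the formulas, so the sketch below uses the standard textbook definitions. It is not the authors' evaluation code. Two details are assumptions: MAPE skips entries whose true volume is zero, and multi-step predictions are stored as nested lists shaped (samples, steps, sensors); `per_step_mae` and `relative_reduction` are hypothetical helper names.

```python
import math
from typing import List, Sequence


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent.

    Assumption: entries with zero true volume are skipped, since the
    percentage error is undefined there.
    """
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t != 0]
    if not pairs:
        raise ValueError("MAPE is undefined when all true values are zero")
    return 100.0 * sum(abs((t - p) / t) for t, p in pairs) / len(pairs)


def relative_reduction(baseline: float, model: float) -> float:
    """Percentage by which `model`'s error is lower than `baseline`'s."""
    return 100.0 * (baseline - model) / baseline


def per_step_mae(y_true: List[List[List[float]]],
                 y_pred: List[List[List[float]]]) -> List[float]:
    """MAE at each prediction step.

    Hypothetical layout: y[sample][step][sensor]. For step h, errors are
    pooled over all samples and sensors.
    """
    horizon = len(y_true[0])
    result = []
    for h in range(horizon):
        t = [v for sample in y_true for v in sample[h]]
        p = [v for sample in y_pred for v in sample[h]]
        result.append(mae(t, p))
    return result
```

For example, with true volumes `[100, 200, 300]` and predictions `[110, 190, 330]`, MAE is 50/3 ≈ 16.67, RMSE is √(1100/3) ≈ 19.15, and MAPE is 25/3 ≈ 8.33%. A headline figure such as "reduces MAE by 25.3%" corresponds to `relative_reduction(mae_dcrnn, mae_ours)` under these definitions.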

Citation

@InProceedings{Lin2022Network,

author = {Lei Lin and Weizi Li and Lei Zhu},

title = {Data-driven Graph Filter based Graph Convolutional Neural Network Approach for Network-Level Multi-Step Traffic Prediction},

booktitle = {Transportation Research Board 101st Annual Meeting (TRB)},

year = {2022}

}

Contact

Lei Lin (lei.Lin@ieee.org), Weizi Li (wli@memphis.edu), and Lei Zhu (lei.zhu@uncc.edu)

Acknowledgements

The authors would like to thank the University of Memphis for providing the start-up fund.